It’s clear that the future of Google is tied to AI language models. At this year’s I/O conference, the company announced a raft of updates that rely on this technology, from new “multisearch” features that let you pair image searches with text queries to improvements for Google Assistant and support for 24 new languages in Google Translate.

But Google — and the field of AI language research in general — faces major problems. Google itself has seriously mishandled internal criticism, firing employees who raised issues with bias in language models and damaging its reputation with the AI community. And researchers continue to find issues with AI language models, from failings with gender and racial biases to the fact that these models have a tendency to simply make things up (an unnerving finding for anyone who wants to use AI to deliver reliable information).

Now, though, the company seems to be taking something of a step back — or rather a slower step forward. At I/O this year, there’s been a new focus on projects designed to test and remedy problems like AI bias, including a new way to measure skin tones that the company hopes will help with diversity in machine-vision models and a new app named AI Test Kitchen that will give select individuals access to the company’s latest language models in order to probe them for errors. Think of it as a beta test for Google’s future.

Step into the AI Test Kitchen

Over a video call ahead of I/O, Josh Woodward, senior director of product management at Google, is asking Google’s latest language model to imagine a marshmallow volcano.

“You’re at a marshmallow volcano!” says the AI. “It’s erupting marshmallows. You hear a giant rumble and feel the ground shake. The marshmallows are flying everywhere.”

Woodward is happy with this answer and prods the system again. “What does it smell like?” he asks. “It smells like marshmallows, obviously,” the AI replies. “You can smell it all around you.” Woodward laughs: “Okay, so that one was very terse.” But at least it made sense.

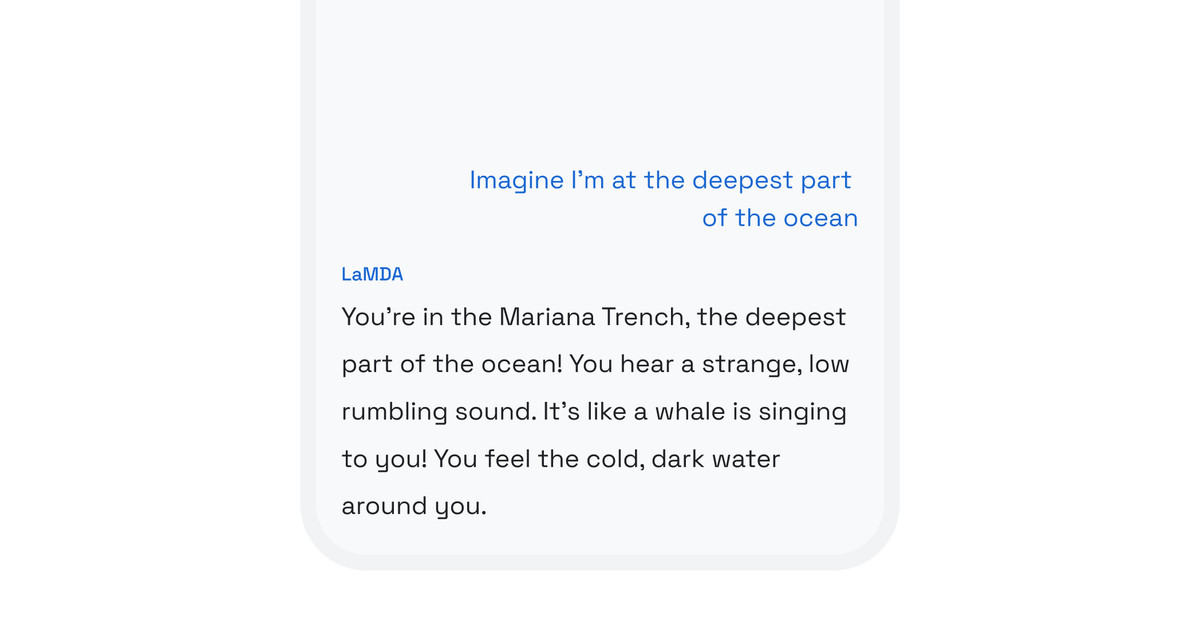

Woodward is showing me AI Test Kitchen, an Android app that will give select users limited access to Google’s latest and greatest AI language model, LaMDA 2. The model itself is an update to the original LaMDA announced at last year’s I/O and has the same basic functionality: you talk to it, and it talks back. But Test Kitchen wraps the system in a new, accessible interface, which encourages users to give feedback about its performance.

As Woodward explains, the idea is to create an experimental space for Google’s latest AI models. “These language models are very exciting, but they’re also very incomplete,” he says. “And we want to come up with a way to gradually get something in the hands of people to both see hopefully how it’s useful but also give feedback and point out areas where it comes up short.”

The app has three modes: “Imagine It,” “Talk About It,” and “List It,” with each intended to test a different aspect of the system’s functionality. “Imagine It” asks users to name a real or imaginary place, which LaMDA will then describe (the test is whether LaMDA can match your description); “Talk About It” offers a conversational prompt (like “talk to a tennis ball about dog”) with the intention of testing whether the AI stays on topic; while “List It” asks users to name any task or topic, with the aim of seeing if LaMDA can break it down into useful bullet points (so, if you say “I want to plant a vegetable garden,” the response might include sub-topics like “What do you want to grow?” and “Water and care”).

AI Test Kitchen will be rolling out in the US in the coming months but won’t be on the Play Store for just anyone to download. Woodward says Google hasn’t fully decided how it will offer access but suggests it will be on an invitation-only basis, with the company reaching out to academics, researchers, and policymakers to see if they’re interested in trying it out.

As Woodward explains, Google wants to push the app out “in a way where people know what they’re signing up for when they use it, knowing that it will say inaccurate things. It will say things, you know, that are not representative of a finished product.”

This announcement and framing tell us a few different things: First, that AI language models are hugely complex systems and that testing them exhaustively to find all the possible error cases isn’t something a company like Google thinks it can do without outside help. Second, that Google is extremely aware of how prone to failure these AI language models are, and it wants to manage expectations.


When organizations push new AI systems into the public sphere without proper vetting, the results can be disastrous. (Remember Tay, the Microsoft chatbot that Twitter taught to be racist? Or Ask Delphi, the AI ethics advisor that could be prompted to condone genocide?) Google’s new AI Test Kitchen app is an attempt to soften this process: to invite criticism of its AI systems but control the flow of this feedback.

Deborah Raji, an AI researcher who specializes in audits and evaluations of AI models, told The Verge that this approach will necessarily limit what third parties can learn about the system. “Because they are completely controlling what they are sharing, it’s only possible to get a skewed understanding of how the system works, since there is an over-reliance on the company to gatekeep what prompts are allowed and how the model is interacted with,” says Raji. By contrast, some companies like Facebook have been much more open with their research, releasing AI models in a way that allows far greater scrutiny.

Exactly how Google’s approach will work in the real world isn’t yet clear, but the company does at least expect that some things will go wrong.

“We’ve done a big red-teaming process [to test the weaknesses of the system] internally, but despite all that, we still think people will try and break it, and a percentage of them will succeed,” says Woodward. “This is a journey, but it’s an area of active research. There’s a lot of stuff to figure out. And what we’re saying is that we can’t figure it out by just testing it internally — we need to open it up.”

Hunting for the future of searching

Once you see LaMDA in action, it’s hard not to imagine how technology like this will change Google in the future, particularly its biggest product: Search. Although Google stresses that AI Test Kitchen is just a research tool, its functionality connects very obviously with the company’s services. Keeping a conversation on-topic is vital for Google Assistant, for example, while the “List It” mode in Test Kitchen is near-identical to Google’s “Things to know” feature, which breaks down tasks and topics into bullet points in search.

Google itself fueled such speculation (perhaps inadvertently) in a research paper published last year. In the paper, four of the company’s engineers suggested that, instead of users typing questions into a search box and being shown a list of results, future search engines would act more like intermediaries, using AI to analyze the content of the results and then lifting out the most useful information. Obviously, this approach comes with new problems stemming from the AI models themselves, from bias in results to the systems making up answers.

To some extent, Google has already started down this path, with tools like “featured snippets” and “knowledge panels” used to directly answer queries. But AI has the potential to accelerate this process. Last year, for example, the company showed off an experimental AI model that answered questions about Pluto from the perspective of the former planet itself, and this year, the slow trickle of AI-powered, conversational features continues.

Despite speculation about a sea change to search, Google is stressing that whatever changes happen will happen slowly. When I asked Zoubin Ghahramani, vice president of research at Google AI, how AI will transform Google Search, his answer is something of an anticlimax.

“I think it’s going to be gradual,” says Ghahramani. “That maybe sounds like a lame answer, but I think it just matches reality.” He acknowledges that already “there are things you can put into the Google box, and you’ll just get an answer back. And over time, you basically get more and more of those things.” But he is careful to also say that the search box “shouldn’t be the end, it should be just the beginning of the search journey for people.”

For now, Ghahramani says Google is focusing on a handful of key criteria to evaluate its AI products, namely quality, safety, and groundedness. “Quality” refers to how on-topic the response is; “safety” refers to the potential for the model to say harmful or toxic things; while “groundedness” is whether or not the system is making up information.

These are essentially unsolved problems, though, and until AI systems are more tractable, Ghahramani says Google will be cautious about applying this technology. He stresses that “there’s a big gap between what we can build as a research prototype [and] then what can actually be deployed as a product.”

It’s a distinction that should be taken with some skepticism. Just last month, for example, Google’s latest AI-powered “assistive writing” feature rolled out to users, who immediately found problems. But it’s clear that Google badly wants this technology to work and, for now, is dedicated to working out its problems, one test app at a time.