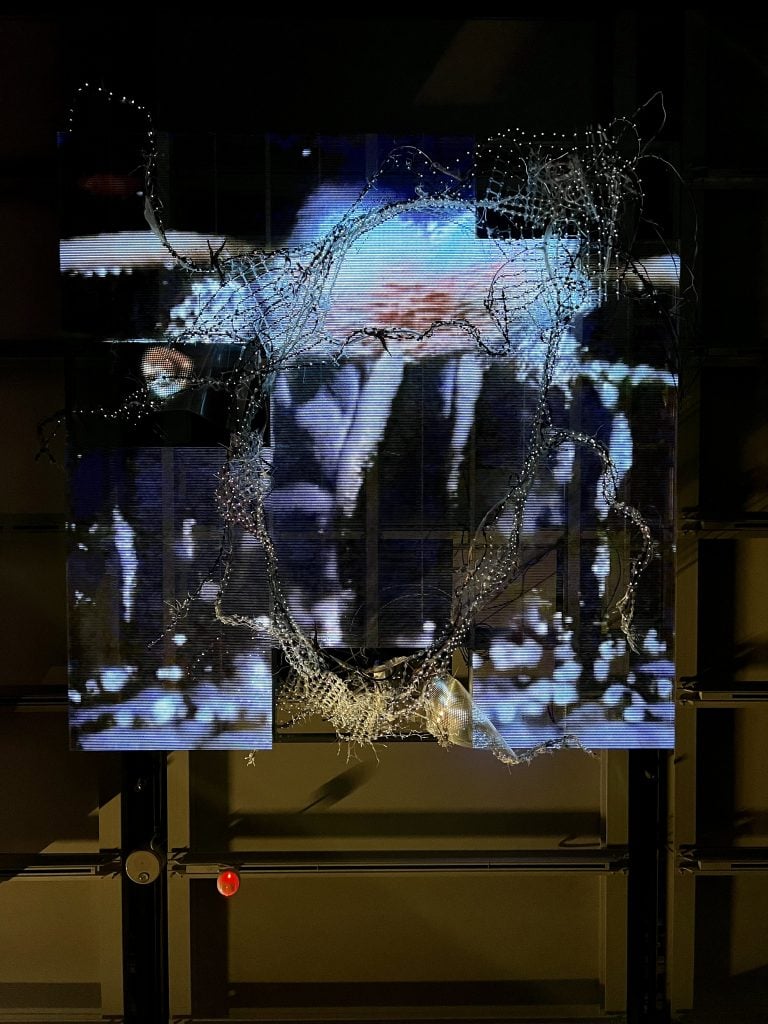

In the darkened halls of the sixth floor of the Whitney Biennial, viewers will come across a quiet alcove with comfortable couches positioned beneath gently glowing LED screens and surrounded by sculptural netting. The pulsating display is the work of artist WangShui—and of artificial intelligence.

When it comes to applications of AI in art, most have been more impressive technologically than artistically. But WangShui’s work at the Whitney Biennial represents a more sophisticated meeting of art and technology, in which the artist has programmed AI to help create landscapes of otherworldly beauty.

Their contribution to the nation’s leading biennial serves as a reminder that as our increasingly plugged-in world becomes even more technology-dependent, artists are finding exciting ways to harness that power for art.

We spoke with WangShui about “collaborating” with AI, and how their biennial installation—as soothing as it is high-tech—interacts with viewers in the galleries.

Work by WangShui at the Whitney Biennial. Photo courtesy of the artist.

How did you begin to incorporate artificial intelligence into your work and what initially interested you about it?

So many arms of my practice naturally converged and coalesced in AI. It’s been a long slow approach that wasn’t at all intentional. For example, I recently realized that all of my woven LED video sculptures were attempts to physically interpolate the latent space between pixels. I also found that my research into perception, posthumanism, and neurodivergence all gently entangled under the lens of AI.

The wall text describes the LED ceiling work as “a collage of ‘self-conscious’ generative adversarial networks.” Can you explain what that means?

[The work, titled] Scr∴pe II is composed of various types of GANs that are woven or “collaged” together across various types of LED screens. For example, if you look closely, there are two skin-like LED films metastasized into the parasitic screen which sense and dimensionalize GANs from the main screen into 3D animations. Then there are active sensors in the work that read light levels emitted from the screens themselves and CO2 levels from viewers. This data is fed back into the image generation to affect fun, pace, and even brightness of the videos. At night, when the museum is empty, the work dims and slows down to a state of suspended animation.

Were you surprised that the Whitney Biennial was interested in showing a work partially authored by artificial intelligence? Do you think we will see more art involving AI at major exhibitions?

AI is already so fundamental to contemporary daily life and thus also to art production. As the technologies advance and become more prevalent they will weave together more seamlessly and become more invisible. In this sense, it’s important for me to represent complex configurations across a myriad of physical and algorithmic structures.

What are some of the challenges you encountered “co-authoring” art with artificial intelligence?

Co-authoring is just another form of circumventing the ego. It’s psychedelic in that sense, so I enjoyed every second. I’m on a mission to deliquesce.

How often did you have to retrain the algorithm, or try again to generate the result you wanted? Or were there a lot of happy accidents? Would the work appear differently in a less controlled environment?

I think of the training process as a sort of breathwork between me, my incredible programmers Moises Sanabria and Fabiola Larios, and the various AI programs we used. It’s about experimentation, deep learning, and ultimately syncing.

The neural networks are working to generate imagery based on a dataset you provided. What is in that dataset?

Each dataset is a sort of journal for me. They are composed of images and subjects I’m researching at the moment. Each iteration from the “Scr∴pe” series builds on the previous dataset to develop an ever-evolving consciousness. At this point it includes datasets of thousands of images that span deep sea corporeality, fungal structures, cancerous cells, baroque architecture, and so much more.

WangShui, Titration Point (Isle of Vitr∴ous). Photo courtesy of the artist. Photo by Alon Koppel Photography.

The paintings have a real sense of the artist’s hand. How important was that for you to maintain in a piece that is so closely linked to AI?

Since the paintings are interpolations of my gestures and those of the AI, they are about capturing the process of merging.

What is the painting process like? Do you make marks based on the decisions made by the AI?

The process is a recursive feedback loop. I use AI programs to algorithmically generate new images from my previous paintings which I then sketch, collage, and abrade into the aluminum surfaces. I keep thinking about the term “deep learning” in relation to carefully drawing, mirroring, and abrading lines produced by the AI. I then paint thinly over the abraded images to try and find the sweet spot where the latent space of the oil is revealed through the refractive index below. Of course I improvise all along the way and end up just following the energy.

What inspired your otherworldly landscape, “the Isle of Vitr∴ous,” and how do you hope viewers experience this space?

I think of these works as a series of post-human cave paintings that mark a specific moment in human evolution. In that sense, “Isle of Vitr∴ous” is sort of my Galapagos, but as a non-place that exists only between structures of perception. Vitreous is the clear gel in mammalian eyes that allows for light to pass through the retina.

People never believe me when I tell them that I look at landscape painting more than any other kind of art, but the AI picked up on it and reflected it back to me. The title, Titration Point (Isle of Vitr∴ous), is about the mountain of tiny scratches I enacted on the aluminum surface as a form of sensory integration between myself and the AI.

“Whitney Biennial 2022: Quiet as It’s Kept” is on view at the Whitney Museum of American Art, 99 Gansevoort Street, New York, April 6–September 5, 2022.
